Approaching the year 2020, it is easy to realise that we all live in a world in which more and more decisions are taken by algorithms rather than by humans following established rules. Even those who have no access to computers, or refuse to use them, are affected. As a direct result of this condition, people have no idea how algorithms affect their lives; they just know that they do, sometimes. The space where rules are made is technological, not political. Algorithms can only be negotiated by specialists, then imposed top-down or introduced with a quick ceremony: a click on the agreement. Mere "users" have no power over the algorithms, nor any comprehension of their rationality, which is often hidden behind trade secrets.

Algorithms have enormous power over those who are treated as users: they can inflict injustice, induce affective and psychological breakdowns, and exploit people's labour and need for liquidity.

Three main types of failure have occurred so far:

- False positives
- Human psychosis
- Alienated labour

In what follows we look more closely at these three failure scenarios. We then point to solutions that are being adopted by policy makers and activists, including the governance of an algorithm by its own participants (no longer users), for which the term “algorithmic sovereignty” is proposed.

False Positives

An algorithm can be thought of in simple terms: it processes large amounts of data according to its directives, then marks some samples as positive matches. This happens when an article in our social-media news feed is marked as "relevant" for us, as well as when a person is marked as a risk by software used by law enforcement. A false positive, then, is an error that occurs when the algorithm marks something or someone that was not supposed to be marked, leading to the dissatisfaction of a reader in the case of relevant articles, or to the unnecessary treatment of a person in the case of risk assessment.
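The description above can be made concrete with a toy sketch. Everything in it is an assumption made for illustration: the sample names, the scores, the threshold and the "ground truth" labels are invented, and real systems are far more complex. It only shows the mechanism: samples are marked when a score crosses a threshold, and a false positive is a marked sample that should not have been marked.

```python
from typing import NamedTuple


class Sample(NamedTuple):
    name: str             # hypothetical identifier
    score: float          # hypothetical score assigned by the algorithm
    truly_positive: bool  # ground truth, usually unknown to the algorithm


def mark_positives(samples, threshold):
    """Return the samples the algorithm marks as positive matches."""
    return [s for s in samples if s.score >= threshold]


def false_positives(marked):
    """Return the marked samples that were not supposed to be marked."""
    return [s for s in marked if not s.truly_positive]


# Invented data, for illustration only.
SAMPLES = [
    Sample("a", 0.91, True),
    Sample("b", 0.78, False),
    Sample("c", 0.40, False),
    Sample("d", 0.15, True),
]

marked = mark_positives(SAMPLES, threshold=0.7)
wrongly_marked = false_positives(marked)

print("marked:", [s.name for s in marked])
print("false positives:", [s.name for s in wrongly_marked])
```

In this invented data set, sample "b" is marked although it should not have been: it is the false positive.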

It is reasonable to consider the living world as an infinite source of unknowns: nothing is "absolutely secure", there is no "perfect system", and the possibility of error is always there; greater accuracy can only statistically lead to fewer errors. It is also important to consider that, in the living world, when a subject is a false positive the error affects the entire subject, not just a "statistical fraction" of it. In other words, if there is a one in a million probability of killing someone by mistake, then when it occurs we have still killed an innocent.
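A small worked example may help to show why a one in a million error rate is not reassuring at scale. The rate comes from the paragraph above; the number of people screened is a purely hypothetical assumption, chosen only to make the arithmetic visible. The sketch also assumes that every screening is independent, which is a simplification.

```python
from fractions import Fraction


def expected_false_positives(screened: int, rate: Fraction) -> Fraction:
    """Average number of people wrongly marked among those screened."""
    return screened * rate


def probability_of_at_least_one(screened: int, rate: float) -> float:
    """Chance that at least one person is wrongly marked,
    assuming each screening is independent (a simplification)."""
    return 1.0 - (1.0 - rate) ** screened


RATE = Fraction(1, 1_000_000)  # "one in a million", as in the text
SCREENED = 5_000_000           # hypothetical number of people screened

expected = expected_false_positives(SCREENED, RATE)
at_least_one = probability_of_at_least_one(SCREENED, float(RATE))

print(f"expected false positives: {expected}")
print(f"probability of at least one: {at_least_one:.3f}")
```

Under these assumptions, about five people are wrongly marked on average, and it is almost certain that at least one is. Each of them is a whole person, not a fraction.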

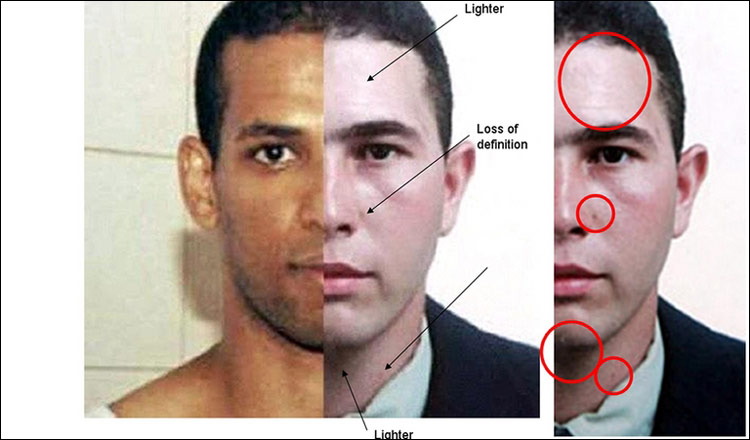

In the case of facial recognition programs, the tragic story of Jean Charles de Menezes is exactly this: shot in London at Stockwell tube station on 22 July 2005 by unidentified specialist firearms officers, he was a "false positive". What is disturbing in this episode is not just that an innocent was killed because the growing apparatus of surveillance cameras confused him with a terrorist, but also how law-enforcement officials made use of his image post-mortem in the mainstream media to justify their error.

Illustration 1: Image aired by UK media commenting on the resemblance between the victim and a pursued terrorist

A bit later, in 2008, the Security Service of Great Britain (MI5) decided to "mine" all information about public transportation: all private data about each person travelling in England was made available for computational analysis by law enforcement agencies, in order to find hints and match patterns of terrorist activity. Many other countries have followed England's example, trampling over legal obstacles and even constitutional laws. In the Netherlands the "sleepwet" campaign managed to gather enough signatures for a referendum against this practice, and then even won the referendum; nevertheless, the government went ahead and implemented this form of data-mining anyway.

While contemporary security research emphasizes automatic pattern recognition in human behaviour, in the near future large-scale analysis may be exercised on the totality of data available and at unprecedented depths in the human brain. Yet algorithmic models fail to incorporate the risks of systemic failure: they can hardly contemplate the ethical costs of killing an innocent. To keep campaigning for the privacy of individuals is rather pointless at this point: what is really at stake is how our societies are governed, how we rate people's behaviour, identify deviance and act upon it.

Human psychosis

What we can observe today in contexts where algorithms are deployed is a condition of subjugation in which the living participants in these systems do not even share knowledge of the algorithms governing their spaces.

The logic of algorithms is often invisible, while only their results are manifest. The cognitive advantage or knowledge capital they offer is up for sale to advertisers that pay to be found. Cases like "Cambridge Analytica" have unveiled questionable practices of political manipulation exercised this way, through a hidden organising process to which the user is exposed.

This condition now extends beyond search engines: for an individual participant, hidden algorithms govern visibility ratings on-line, success in dating, the likelihood of receiving a loan, and just-in-time prices for everything being bought, matched to the subject's desires and needs.

Psychosis leads to tragedies, as on 3 April 2018, when Nasim Aghdam, a content creator who operated a number of channels covering art, music and veganism, entered the YouTube headquarters in San Bruno, California, and opened fire on YouTube employees, severely wounding three, before turning the gun on herself. She claimed that changes in YouTube's rating algorithms were hindering her visibility and unjustly excluding her from her audience, claims that led to a strong sense of injustice and to self-destruction.

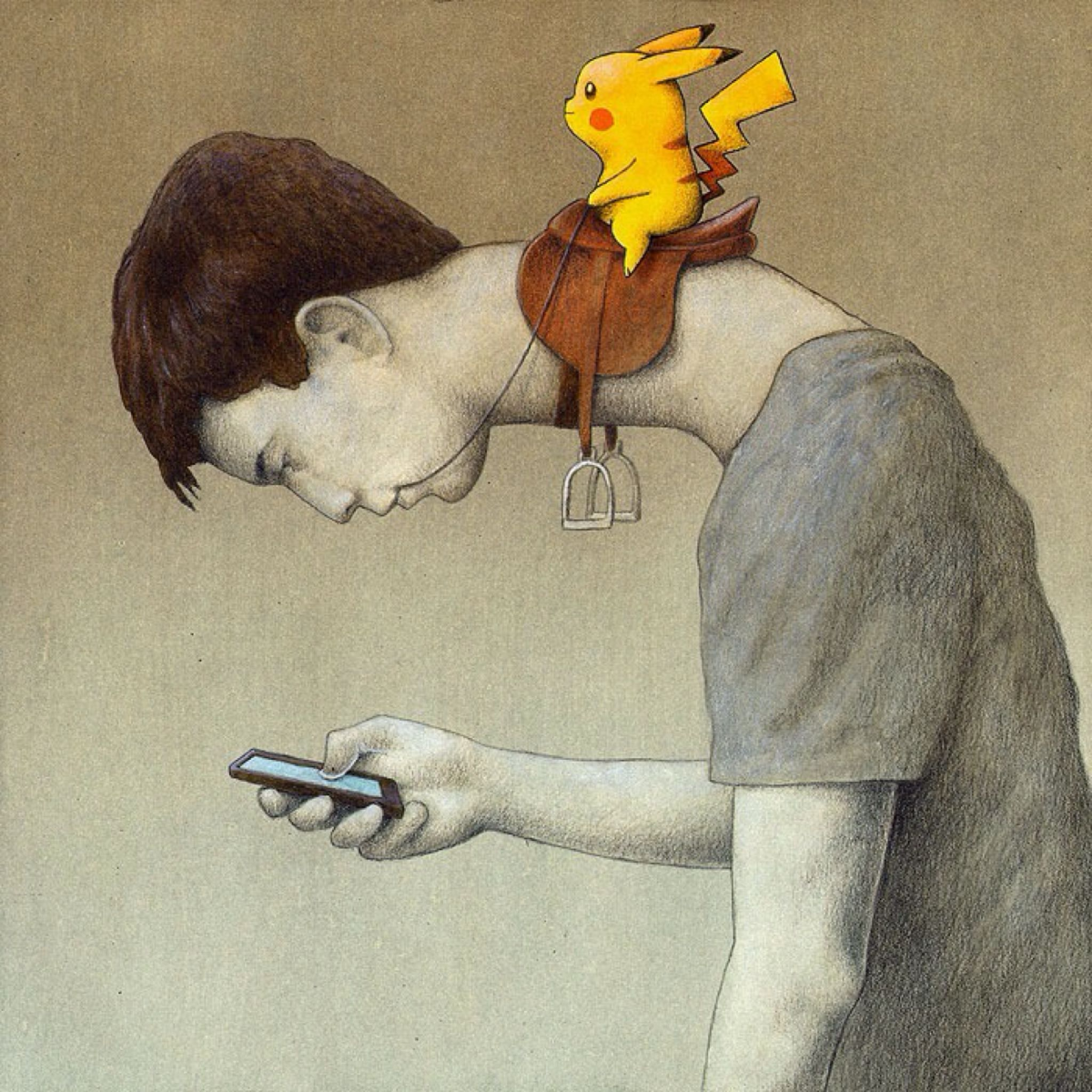

Psychopathology emerges in the breakdown of the barriers between lives lived excessively on screen and the external, sensory and emotional world. This is a dimension where injustice, abuse and conflict as seen on a screen can be projected directly into real life, with dramatic consequences. Psychopathology stems from a negative, irrational, poorly tuned response to the affective environment; and affect is directly linked by algorithms to the social and cultural expectation of participation in mediated environments where the logics of appreciation, remuneration and punishment are hidden.

Illustration 2: "Control Pokemon" by Pawel Kuczynski (2016)

Alienated Labour

Going beyond the affective dimension, it is human labour activity that nurtures algorithms, while being less and less considered labour itself. Human labour activity on-line is often alienated in the domain of games or social interaction and at the same time exploited for its value on numerous markets.

So-called "platform work" basically consists of online platforms using hidden algorithms to match the supply of and demand for paid labour. Workers exploited by crowd-sourcing platforms have no possibility of negotiating their own ratings, or of understanding and influencing the algorithms that validate the work being done.

If we can consider the labour conditions of "platform workers" as analogous to those of what the Italian Autonomous movements have called "precarious workers", it may be possible to facilitate trade-union consciousness among large and growing multitudes of workers. But even if today it seems easier to organise and communicate with a large number of people, it is still difficult to adopt platforms that provide the necessary independence, integrity and confidentiality for such communications.

The journey is still long and, rather than regressing into a primitive refusal of algorithms, let's try to look towards a positive horizon of "humanisation of digital labour", encouraging cooperation, co-ownership and the democratic governance of algorithms.

Going beyond the empire of algorithmic profits

Historically, public institutions are an important bastion of rationality that transcends the mere logic of profit and strives for the peace and prosperity of society and the living world. As in similar struggles for food sovereignty, trade unions, workers' societies and social institutions have the important role of stewards of the commons. Let's now imagine a new mission for them: to help participants know the rules of algorithmic systems, to facilitate democratic participation also through algorithms, to make it possible to appeal algorithmic decisions, and to guarantee human intervention in life-critical decisions.

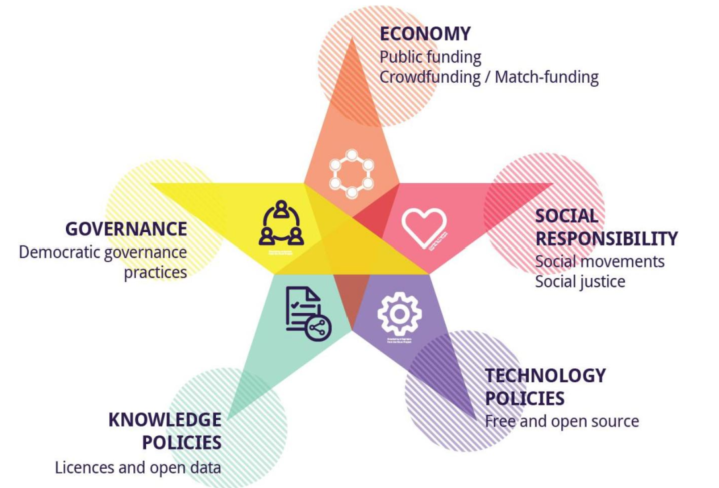

Our project DECODE goes in this direction, following a technical and scientific research path and calling for a new form of municipal rationality that contemplates technological sovereignty, citizen participation and ownership, and algorithmic sovereignty.

This narrative is echoing through the world's biggest municipal administrations as we speak: a stance against the colonization of dense settlements by complex technical systems that are far beyond the reach of citizens' political control. The "Manifesto in favour of technological sovereignty and digital rights for cities" is now being considered as a standard guideline for ethics in governance by many cities of the world.

Lastly, being a software developer myself, I'd like to call fellow programmers out there to action: we need to write code that is understandable by other humans and by animals, plants, all the living world we inoculate with our sensors and manipulate through automation. This is what we are trying to do with the development of Zencode, which makes it possible to write smart contracts in a language that most humans can understand. Good code is not what is most skillfully crafted or most efficient, but what can be read by others, studied, changed, adapted. Let's adopt intuitive namespaces that can be easily matched with reality or simple metaphors, and let's make sure that what we write is close to what we mean. A common understanding of algorithms is necessary, because their governance is an inter-disciplinary exercise and cannot be left in the hands of a technical elite.

Illustration 3: from a deliverable of the DECODE project on algorithms and governance.

It is also important to re-think the meaning of "innovation" in light of its side-effects on legacy systems that are already intelligible to a larger portion of participants. Innovation often introduces complexity and technical debt that is not only economic but political, as it affects the safety of systems, their maintainability and the possibility of their being appropriated by communities.

When feeling compelled to innovate, observe and ask everyone the question: does anyone really need to replace what is already in use with something else? Before changing an algorithm we should first ask how it has worked so far and why, using what languages, what sort of people operated it and which ethos they followed.

This is why we are maintaining and developing Devuan GNU+Linux as a base system for DECODE: a minimalist operating system that relies on mature standards. Most people using Devuan today, a large and growing community, do not refuse innovation per se, but value freedom of choice, and for them legacy systems are sometimes the best choice.

DECODE was funded by the European Union's Horizon 2020 Programme, under grant agreement number 732546.
